A self-driving car is capable of sensing its environment and navigating without human input. To accomplish this task, each vehicle is usually outfitted with a GPS unit, an inertial navigation system, and a range of sensors including laser rangefinders, radar, and video. The vehicle uses positional information from the GPS and inertial navigation system to localize itself and sensor data to refine its position estimate as well as to build a three-dimensional image of its environment.
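One common way to combine a GPS fix with an inertial or sensor-based position estimate is to weight each by how much it is trusted. The sketch below shows the one-dimensional form of that update (the measurement step of a Kalman filter). The function name `fuse` and the example variances are illustrative assumptions, not taken from any particular vehicle's software.

```python
def fuse(estimate_a, variance_a, estimate_b, variance_b):
    """Fuse two independent 1-D position estimates by inverse-variance weighting.

    This is the scalar Kalman measurement update: the estimate with the
    smaller variance (more trust) pulls the result closer to itself.
    """
    gain = variance_a / (variance_a + variance_b)
    fused = estimate_a + gain * (estimate_b - estimate_a)
    fused_variance = (1.0 - gain) * variance_a
    return fused, fused_variance


if __name__ == "__main__":
    # Hypothetical numbers: a GPS fix at 100.0 m with variance 9.0 m^2,
    # refined by a sensor-based estimate at 103.0 m with variance 1.0 m^2.
    position, variance = fuse(100.0, 9.0, 103.0, 1.0)
    print(position, variance)
```

The fused variance is always smaller than either input variance, which is why adding a second sensor refines the estimate rather than merely averaging it.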

Radar

Radar, or Radio Detection and Ranging, supplements higher-resolution sensors when visibility is low, such as in a storm or at night.

Radar works by continuously emitting radio waves and measuring the reflections that return to the source, which reveal the distance, direction and speed of objects. Although radar works in all visibility conditions and is relatively inexpensive, it provides less detail about detected objects than LiDAR or cameras.
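As a rough illustration of how a reflection yields distance and speed, the sketch below uses the textbook relations: range from the round-trip time of the echo, and closing speed from the Doppler shift. The function names and the 77 GHz carrier in the example are assumptions made for illustration, and production automotive radars typically use more elaborate modulation schemes than this simple model.

```python
# Speed of light in m/s (exact by definition of the metre).
SPEED_OF_LIGHT = 299_792_458.0


def range_from_round_trip(round_trip_s):
    """Distance to the target in metres.

    The echo travels to the object and back, so the one-way distance
    is half of the total path covered in the round-trip time.
    """
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def radial_speed_from_doppler(doppler_shift_hz, carrier_hz):
    """Closing speed along the line of sight in m/s (positive = approaching).

    Uses the low-speed approximation f_d = 2 * v * f0 / c, which is
    accurate when the target is far slower than light.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)


if __name__ == "__main__":
    # Hypothetical echo: 400 ns round trip, 10 kHz Doppler shift at 77 GHz.
    print(round(range_from_round_trip(400e-9), 2), "m")
    print(round(radial_speed_from_doppler(10_000.0, 77e9), 2), "m/s")
```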

LiDAR

LiDAR, or Light Detection and Ranging, is what self-driving cars use to model their surroundings and provide highly accurate geographical data in a 3D map.

Compared to radar, LiDAR has much higher resolution. This is because LiDAR sensors emit laser pulses instead of radio waves to detect, track and map the car’s surroundings. Both signals travel at the speed of light; the resolution advantage comes from the much shorter wavelength of laser light.

Unfortunately, laser beams do not perform as accurately in weather conditions such as snow, fog, smoke or smog.

Still, even a small object like a child’s ball rolling into the street can be recognized by LiDAR sensors. By comparing successive scans, the system tracks not only the ball’s position but also its speed and direction, which allows the car to yield or stop if the object presents a danger to passengers or pedestrians.
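One simple way to obtain speed and direction from position measurements is to difference two detections of the same object taken a known time apart. The sketch below does that in two dimensions. The function name, the coordinate convention (x forward, y to the left, in metres) and the 10 Hz scan rate are assumptions for illustration, and a real tracker would filter many scans rather than difference just two.

```python
import math


def velocity_between_scans(pos_prev, pos_curr, dt_s):
    """Estimate an object's speed (m/s) and heading (radians) from two
    (x, y) positions measured dt_s seconds apart."""
    dx = pos_curr[0] - pos_prev[0]
    dy = pos_curr[1] - pos_prev[1]
    speed = math.hypot(dx, dy) / dt_s
    heading = math.atan2(dy, dx)  # 0 = straight ahead, +pi/2 = to the left
    return speed, heading


if __name__ == "__main__":
    # Hypothetical ball 10 m ahead, rolling from left to right across the lane,
    # seen in two scans 0.1 s apart (a 10 Hz scan rate).
    speed, heading = velocity_between_scans((10.0, 2.0), (10.0, 1.7), 0.1)
    print(round(speed, 2), "m/s at", round(math.degrees(heading), 1), "degrees")
```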

Cameras and computer vision

Cameras used in self-driving cars have the highest resolution of any sensor. The data processed by cameras and computer vision software can help identify edge-case scenarios and capture detailed information about the car’s surroundings.

All Tesla vehicles with Autopilot capabilities, for example, have 8 external-facing cameras, which help the cars understand the world around them and supply data for training Tesla’s models on future scenarios.

Unfortunately, cameras don’t work as well when visibility is low, such as in a storm, fog or even dense smog. Thankfully, self-driving cars are built with redundant systems to fall back on when one or more systems aren’t functioning properly.

Complementary sensors

Self-driving cars today also carry hardware for GPS tracking, ultrasonic sensors for object detection, and an IMU (inertial measurement unit), which measures the car’s acceleration and rotation rate so that its velocity and orientation can be estimated.
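An IMU reports acceleration and rotation rate rather than velocity itself, so velocity is typically obtained by integrating the acceleration samples over time. The sketch below shows the simplest form of that integration and how a small sensor bias accumulates into a velocity error, which is one reason IMU output is usually combined with GPS. The function name, the 100 Hz sample rate and the 0.05 m/s² bias are assumptions chosen for illustration.

```python
def integrate_velocity(initial_velocity, accel_samples, dt_s):
    """Euler-integrate forward acceleration samples (m/s^2) into velocity (m/s)."""
    velocity = initial_velocity
    for accel in accel_samples:
        velocity += accel * dt_s
    return velocity


if __name__ == "__main__":
    dt = 0.01  # hypothetical 100 Hz IMU
    true_accel = [2.0] * 100                  # 1 s of steady 2 m/s^2
    biased = [a + 0.05 for a in true_accel]   # assumed constant sensor bias
    print(integrate_velocity(10.0, true_accel, dt))
    # The bias adds an error that grows linearly with time (bias * elapsed time).
    print(integrate_velocity(10.0, biased, dt))
```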

An often overlooked but important sensor for self-driving cars is a microphone to process audio information. This becomes vitally important when detecting the need to yield to an emergency vehicle or detecting a nearby accident that could be hazardous to the car.

Computation

For self-driving software to interface with the hardware components in real time and process all sensor data efficiently, it needs a computer powerful enough to handle that volume of data.

The general-purpose chips in a standard computer or smartphone are known as Central Processing Units (CPUs). But when you consider how much computational power a self-driving car needs, a CPU has nowhere near the throughput to handle the number of operations, which is measured in GOPS, or giga (billion) operations per second.

Graphics Processing Units (GPUs) have become the de facto chip for many self-driving car companies. But even GPUs are not the ideal solution when you consider how much data needs to be processed by autonomous vehicles.

Neural network accelerators (NNAs), introduced in Tesla’s FSD chip in 2019, offer far more computing power for processing real-time data from the various cameras and sensors in its cars.

According to Tesla, here is how many frames per second each type of chip can process when running a neural network that requires 35 giga-operations (35 billion operations) per frame:

- CPU: 1.5

- GPU: 17

- NNA: 2100

As you can see, by Tesla’s figures the NNAs process frames roughly 120 times faster than the GPU and 1,400 times faster than the CPU, a major step forward in self-driving car computation.
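The frame rates above can be turned into sustained throughput with simple arithmetic. The sketch below assumes the 35 figure denotes giga-operations needed per frame, so that throughput in GOPS is frames per second multiplied by 35. That interpretation, along with the dictionary and function names, is an assumption made for illustration.

```python
# Assumed workload: giga-operations required to process one frame.
GOP_PER_FRAME = 35.0

# Frames per second reported for each chip type in the list above.
FRAMES_PER_SECOND = {"CPU": 1.5, "GPU": 17.0, "NNA": 2100.0}


def sustained_gops(chip):
    """Sustained throughput in giga-operations per second for a chip."""
    return FRAMES_PER_SECOND[chip] * GOP_PER_FRAME


def speedup(chip, baseline):
    """How many times faster `chip` processes frames than `baseline`."""
    return FRAMES_PER_SECOND[chip] / FRAMES_PER_SECOND[baseline]


if __name__ == "__main__":
    for chip in FRAMES_PER_SECOND:
        print(chip, sustained_gops(chip), "GOPS")
    print("NNA vs GPU:", round(speedup("NNA", "GPU"), 1))
    print("NNA vs CPU:", round(speedup("NNA", "CPU"), 1))
```

Under that assumption the NNA figure works out to 73,500 GOPS, or about 73.5 trillion operations per second.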

Software technology of self-driving cars

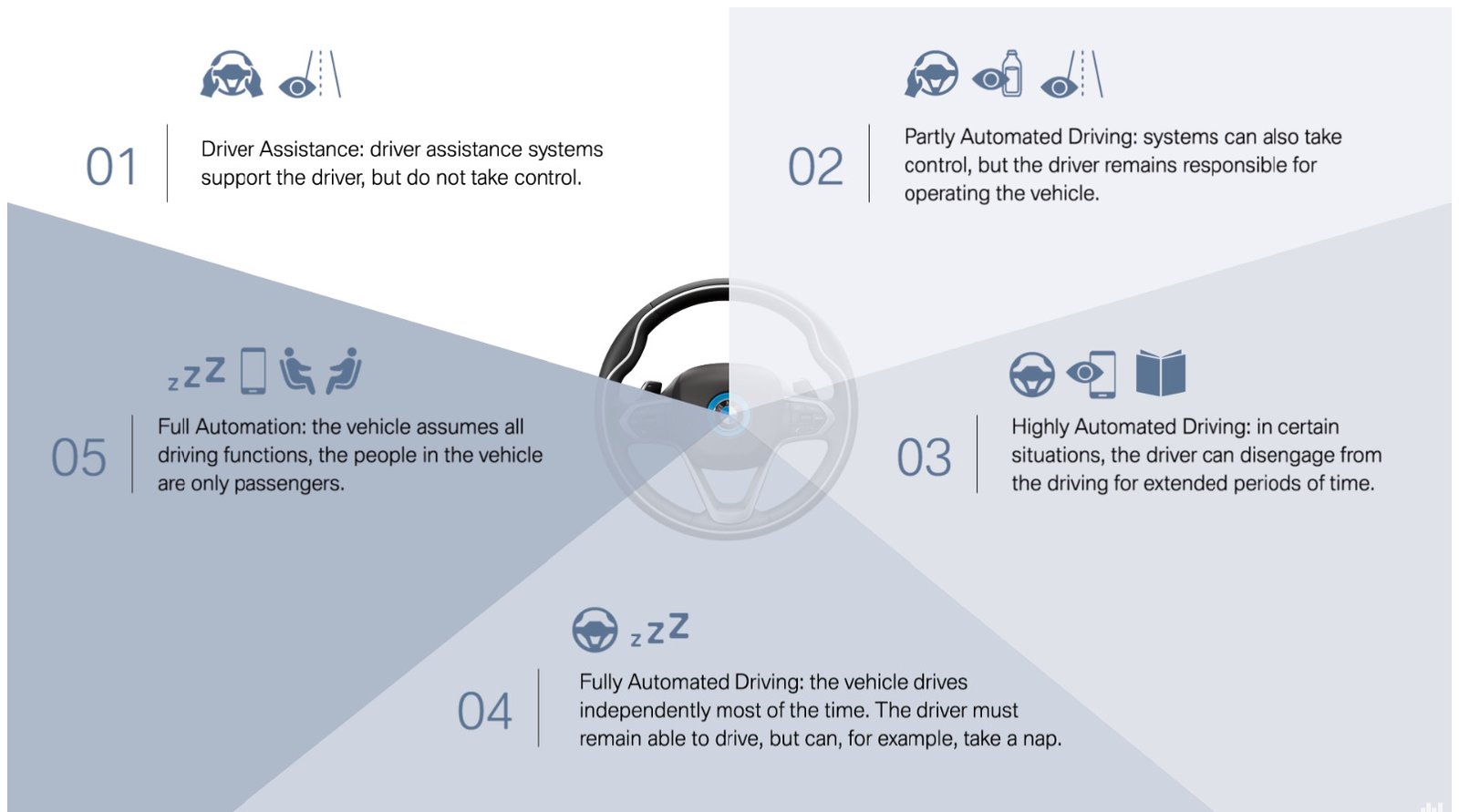

When self-driving cars reach Level 5 autonomy, they will almost certainly use a combination of three distinct components: hardware, data and neural network algorithms.

We’ve already touched on the hardware component, which is currently the most mature of the three. The algorithm and data components have a long way to go before we reach Level 5 autonomy.

Neural network algorithms

A neural network is an algorithm built from layers of matrix operations that learns to recognize patterns without being explicitly programmed for each one. Instead, neural networks are trained on labeled data until they become adept at analyzing dynamic situations and acting on their decisions.

Neural networks must be trained with data about the task they are expected to perform. When Google trains an image recognition neural network, for example, it must train the model on millions upon millions of labeled images.
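To make "training on labeled data" concrete, the sketch below trains the smallest possible network, a single neuron, with gradient descent. The toy dataset (brake only when an obstacle is both close and in the lane), the function names and the hyperparameters are all invented for illustration; real driving models have millions of parameters and learn from images rather than two hand-made features.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, labels, epochs=5000, learning_rate=1.0):
    """Fit one neuron (weights + bias) by gradient descent on logistic loss."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(samples, labels):
            # Prediction error for this labeled example.
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for i, xi in enumerate(x):
                grad_w[i] += error * xi
            grad_b += error
        # Step against the average gradient over the whole dataset.
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


def predict(weights, bias, x):
    """Return 1 (brake) or 0 (continue) for a feature vector x."""
    return 1 if sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) >= 0.5 else 0


# Hypothetical labeled data: (obstacle_close, obstacle_in_lane) -> brake?
SAMPLES = [(0, 0), (0, 1), (1, 0), (1, 1)]
LABELS = [0, 0, 0, 1]

if __name__ == "__main__":
    w, b = train(SAMPLES, LABELS)
    print([predict(w, b, x) for x in SAMPLES])
```

Nothing in the code states the rule "brake only when both conditions hold"; the neuron recovers it from the labels alone, which is the sense in which the network is not explicitly programmed.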

Data

Data is one of the most important components for fully autonomous vehicles (Level 5) to become a reality.

Large amounts of data are the raw materials for deep learning models to become finished products, in this case, fully autonomous vehicles.

Tesla currently has the largest source of data, with more than 400,000 vehicles on the road transmitting data from their sensors. By January 2019, Tesla had 1 billion miles of Autopilot usage data. Compare this to Waymo, which had only passed 10 million autonomous miles by October 2018.

According to RAND, for autonomous vehicles to demonstrate with 95% confidence that they are more reliable than human drivers, the autonomous technology would need to be 100% in control for 275 million miles without a fatality.
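The 275 million mile figure can be reproduced with a short calculation. The sketch below assumes the human benchmark is about 1.09 fatalities per 100 million miles (understood to be the US rate used in the RAND analysis) and that the autonomous fleet drives the whole distance with zero fatalities. The function name is an illustrative assumption.

```python
import math


def failure_free_miles(human_rate_per_mile, confidence):
    """Miles that must be driven with zero fatalities to show, at the given
    confidence, that the true fatality rate is below the human rate.

    If the true rate were R per mile, the chance of n clean miles is
    (1 - R)**n. Requiring that chance to be at most (1 - confidence)
    gives n = ln(1 - confidence) / ln(1 - R).
    """
    return math.log(1.0 - confidence) / math.log1p(-human_rate_per_mile)


if __name__ == "__main__":
    # Assumed human benchmark: 1.09 fatalities per 100 million miles.
    human_rate = 1.09 / 100_000_000
    miles = failure_free_miles(human_rate, 0.95)
    print(round(miles / 1e6), "million miles")
```

Against Waymo’s 10 million miles, the same formula shows why the requirement is so demanding: the distance scales inversely with the fatality rate being tested, and human fatalities are already rare per mile driven.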